Convert PyTorch model to ONNX

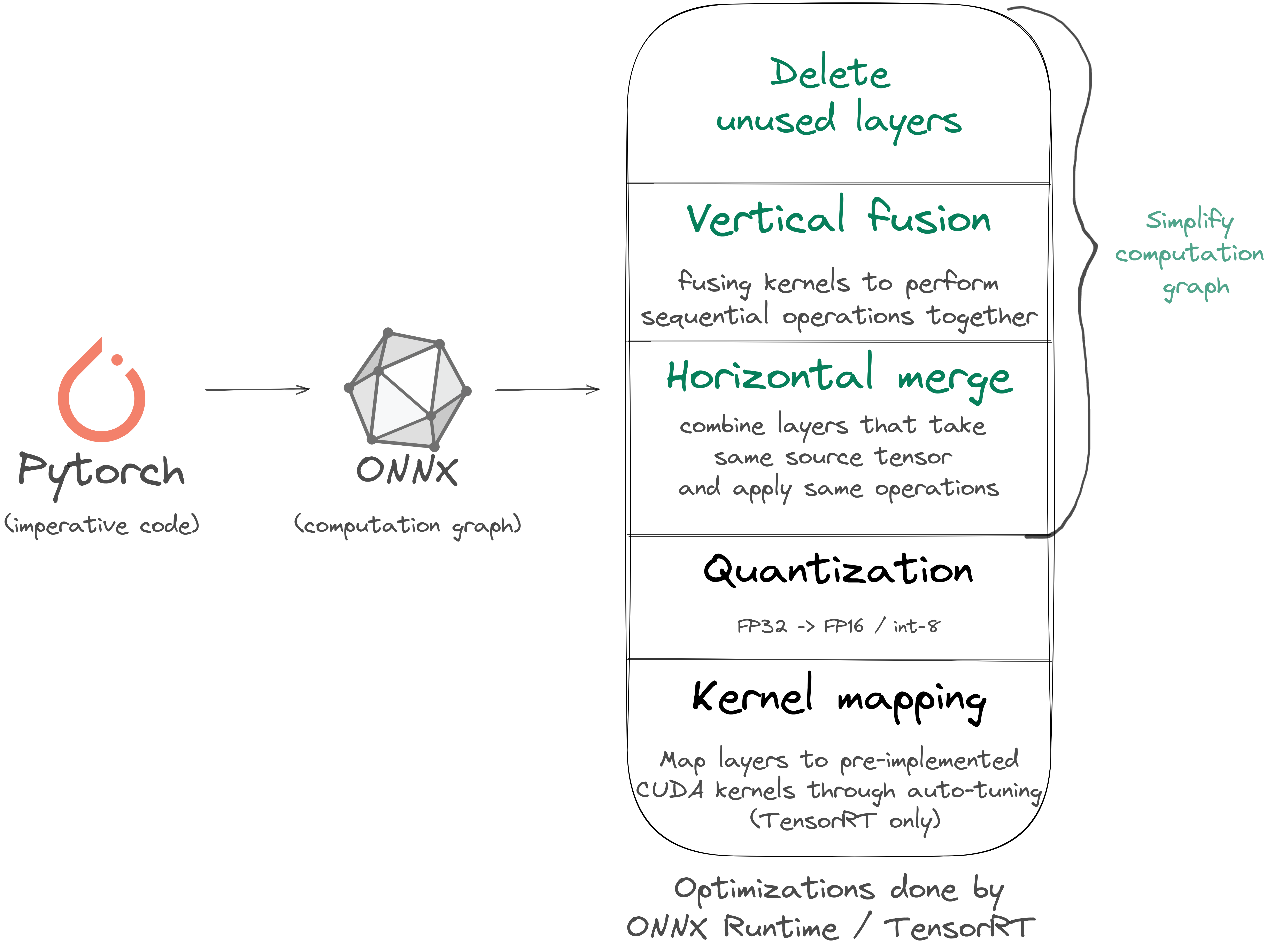

To ease optimization, we need to convert our PyTorch model, written in imperative code, into a mostly static graph.

Optimization tooling can then run static analysis on that graph and search for patterns to optimize.

The target graph format is ONNX.

From https://onnx.ai/:

“ONNX is an open format built to represent machine learning models. ONNX defines a common set of operators - the building blocks of machine learning and deep learning models - and a common file format to enable AI developers to use models with a variety of frameworks, tools, runtimes, and compilers.”

The format was initially created by Facebook and Microsoft as a bridge between PyTorch (research) and Caffe2 (production).

There are 2 ways to perform an export from PyTorch:

- tracing mode: send some (dummy) data to the model, and the tool records the operations applied to it as it flows through the model; that way it infers what the graph looks like;
- scripting: requires the model to be written in a certain way to work; its main advantage is that the dynamic logic is kept intact, but it adds many constraints on the way models are written.

Attention

Tracing mode is not magic. For instance, it can’t see operations you perform in NumPy (if any), and the resulting graph is static: the branch taken in an if/else during tracing is fixed forever, for loops are unrolled, etc.

Hugging Face and model authors have taken care that most mainstream models are compatible with tracing mode.
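The tracing caveat above can be illustrated with a small sketch. It uses `torch.jit.trace` on a toy function (`branchy` is a hypothetical name, not part of any library) whose result depends on a data-dependent `if`. Tracing records only the branch taken for the example input, so the traced version silently gives a different result on inputs that would take the other branch.

```python
import torch


def branchy(x: torch.Tensor) -> torch.Tensor:
    # Data-dependent control flow: which branch runs depends on the values in x.
    if x.sum() > 0:
        return x + 10
    return x - 10


# Trace with a positive example: only the "x + 10" branch is recorded.
# PyTorch emits a TracerWarning here, which is exactly the caveat described above.
example = torch.ones(3)
traced = torch.jit.trace(branchy, example)

neg = -torch.ones(3)
eager_out = branchy(neg)   # eager Python takes the else branch
traced_out = traced(neg)   # traced graph replays the frozen "x + 10" branch

print(eager_out)   # tensor([-11., -11., -11.])
print(traced_out)  # tensor([9., 9., 9.])
```

If a model relies on this kind of logic, it either has to be rewritten with tensor operations (for example `torch.where`) or exported through scripting.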

The following commented code performs the ONNX conversion:

Note

One particular point is that we declare some axes as dynamic.

If we did not, the graph would only accept tensors with exactly the same shape as the ones used to build it (the dummy data), so sequence length and batch size would be fixed.

The names we give to the input and output fields will be reused in other tools.

A complete real-life conversion process (including the TensorRT engine step) looks like this: